Roy's notes

= Page move =

After borrowing space on Malin's wiki for some years, further edits will be done on [https://wiki.karlsbakk.net/index.php/Roy%27s_notes my new wiki]. That way, the next time the wiki goes down after something strange has happened, she won't get a ton of messages from me; I'll have to fix it myself. Oh well ;)

= LVM, md and friends =

== Linux' Logical Volume Manager (LVM) ==

</syntaxhighlight>

After the LV is created, a filesystem can be placed on it unless it is meant to be used directly. Applications for direct use include swap space, VM storage and certain database systems. Most of these will, however, work on filesystems too, although my testing has shown a significant performance gain for swap when using dedicated storage without a filesystem. As for filesystems, most Linux users use either ext4 or XFS. Personally, I generally use XFS these days. See my notes below on filesystem choice.

<syntaxhighlight lang="bash">

Then just edit /etc/fstab with the correct data and run mount -a, and you should be all set.

==== Create LVM cache ====

LVMcache is LVM's solution for caching a slowish LV on spinning rust with the help of much faster SSDs. For this to work, use a separate SSD, or a mirror of two (just in case), and add the new disk/md device to the same VG. In this case, we use a small mirror, md1. LVM is not able to cache to a disk or partition outside the volume group.

<syntaxhighlight lang="bash">
vgextend data /dev/md1
#  Physical volume "/dev/md1" successfully created.
#  Volume group "data" successfully extended
</syntaxhighlight>

Then create two LVs: one for the cached data and one for the cache metadata, which is LVM's bookkeeping of which blocks are cached and in what state. The metadata part won't need to be very large, since its size follows the size of the cache, not what's stored in the filesystem; 100M should usually do for a cache of this size. I guess there are ways to calculate it, but again, 1GB should do for everyone (or was that 640k?). As for the data cache, I chose 100G here for a 15TB RAID. It will only cache what's actively used anyway, so there's no need for overkill.
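If you'd rather compute the metadata size than guess, the rule of thumb I recall from lvmcache(7) is roughly 1/1000 of the cache data LV, with a minimum of 8MiB. Treat both numbers as assumptions and check the man page for your LVM version. A minimal sketch; the function name cache_meta_mib is made up for this example:

<syntaxhighlight lang="bash">
# Suggest a cache metadata LV size (MiB) for a cache data LV given in GiB.
# Assumption: metadata of about 1/1000 of the data size, never below 8 MiB.
cache_meta_mib() {
    local data_gib="$1"
    local data_mib=$(( data_gib * 1024 ))
    # Round the division up so we never undershoot
    local meta_mib=$(( (data_mib + 999) / 1000 ))
    if (( meta_mib < 8 )); then
        meta_mib=8
    fi
    echo "$meta_mib"
}
</syntaxhighlight>

For a 100G cache, cache_meta_mib 100 prints 103, so a 1G metadata LV is on the generous side.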

<syntaxhighlight lang="bash">
lvcreate -L 1G -n _cache_meta data /dev/md1
lvcreate -L 100G -n _cache data /dev/md1
# Some would want --cachemode writeback, which really speeds things up, but if you're
# paranoid, I wouldn't recommend it: metadata or data can easily be corrupted in case
# of a failure. Most filesystems will, however, recover from this these days, since
# they use journaling.
lvconvert --type cache-pool --cachemode writethrough --poolmetadata data/_cache_meta data/_cache
lvconvert --type cache --cachepool data/_cache data/data
</syntaxhighlight>

That should be it. If you benchmarked the disk before this, try again, and you'll probably get a nice surprise. If not, well, see below for how to turn this off again :)

==== Disabling LVM cache ====

If you want to change the cached LV, such as growing or shrinking it, you'll have to disable caching first and then re-enable it afterwards. There's no quick-and-easy fix, but you just do as you did when enabling it in the first place.

First, disable caching for the LV. This does it cleanly and removes the LVs created earlier for caching the main device.

<syntaxhighlight lang="bash">
lvconvert --uncache data/data
</syntaxhighlight>

Then, since you now have a VG with an empty PV in it that was used for caching, I'd recommend removing it from the VG for now, so the LV doesn't get expanded onto it as well. Since they're in the same VG, this might happen, and if you get as far as extending the LV and the filesystem on it, and the filesystem is XFS or another filesystem that can't be shrunk, you have a wee problem.

Just remove that PV from the VG:

<syntaxhighlight lang="bash">
vgreduce data /dev/md1
</syntaxhighlight>

The PV is still there, but pvs should now show it as not belonging to any VG.

==== Growing devices ====

</syntaxhighlight>

==== Growing filesystems ====

After the LV has grown, run ''xfs_growfs'' (XFS) or ''resize2fs'' (ext4) to make use of the new space. To grow an ext4 filesystem to all available space, run the command below. To grow it only partially, to shrink it, or for anything else, please see the manual.

The filesystem can be mounted when this is run. If you for some reason get an '''access denied''' error when running this as root or with sudo, it's likely caused by a filesystem error. To fix this, unmount the filesystem, run '''fsck -f /dev/my_vg/thicklv''', and remount it before retrying the resize.

<syntaxhighlight lang="bash">
resize2fs /dev/my_vg/thicklv
</syntaxhighlight>

The same goes for XFS, but note that xfs_growfs doesn't take the device name, but the filesystem's mount point. Also note that an XFS filesystem '''''must''''' be mounted to be grown, in contrast to the old days when filesystems had to be unmounted to be grown.

<syntaxhighlight lang="bash">
xfs_growfs /my_mountpoint
</syntaxhighlight>
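Since the two tools want different arguments, a small helper that prints the right grow command for a given filesystem type might save a typo or two. A minimal sketch; the function name grow_fs_cmd is made up, and it only prints the command rather than running it. As an alternative, lvextend's -r (--resizefs) option grows the filesystem together with the LV in one go.

<syntaxhighlight lang="bash">
# Print (don't run) the command that grows a filesystem to fill its LV.
# Usage: grow_fs_cmd <fstype> <device> <mountpoint>
grow_fs_cmd() {
    local fstype="$1" device="$2" mountpoint="$3"
    case "$fstype" in
        ext2|ext3|ext4)
            # resize2fs takes the device; without a size, it grows to fill it
            echo "resize2fs $device" ;;
        xfs)
            # xfs_growfs takes the mount point, and the filesystem must be mounted
            echo "xfs_growfs $mountpoint" ;;
        *)
            echo "grow_fs_cmd: don't know how to grow '$fstype'" >&2
            return 1 ;;
    esac
}
</syntaxhighlight>

The filesystem type can be looked up with findmnt, e.g. grow_fs_cmd "$(findmnt -no FSTYPE /my_mountpoint)" /dev/my_vg/thicklv /my_mountpoint.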

==== Rename system VG ====

Some distros create VG names as if picked by a sysadmin with a well-developed paranoia about hitting a duplicate. Debian, for instance, uses names like "my-full-hostname-vg". Personally, I don't like these. If I were to move a disk or vdisk somewhere else, I'd make sure not to add a disk with a VG named 'sys' to a system that already has one. If you do, most distros will refuse to use the VGs with duplicate names, and the OS won't boot until one of them is either referenced by its UUID or removed. Being aware of this, I stick to the super-risky practice of naming all system VGs 'sys' and so on.

If you do this, keep in mind that it may blow up your computer and take your girl- or boyfriend with it, or even make either of them pregnant, allowing Trump to rule your life and generally make things suck. You're on your own!

<syntaxhighlight lang="bash">
# Rename the VG
vgrename my-full-hostname-vg sys
</syntaxhighlight>

Now, in /boot/grub/grub.cfg, change the old LV path from something like '/dev/mapper/debian--stretch--machine--vg-root' to your new and fancy '/dev/sys/root'. Likewise, in /etc/fstab, replace occurrences of '/dev/mapper/debian--stretch--machine--vg-' (or similar) with '/dev/sys/'. Here, it ended up like this, with the old lines commented out:

<syntaxhighlight lang="fstab">
#/dev/mapper/debian--stretch--box--vg-root / ext4 errors=remount-ro,discard 0 1
/dev/sys/root / ext4 errors=remount-ro,discard 0 1
# /boot was on /dev/vda1 during installation
UUID=myverylonguuidwithatonofgoodinfoinit /boot ext2 defaults 0 2
#/dev/mapper/debian--stretch--box--vg-swap_1 none swap sw 0 0
/dev/sys/swap_1 none swap sw 0 0
</syntaxhighlight>
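The '--' in those paths comes from device-mapper, which doubles every hyphen inside VG and LV names and joins the two with a single hyphen. Rather than editing by hand, a sketch like the one below could produce the new fstab, keeping the old lines commented out as above. The function names dm_path and rewrite_fstab are made up for this example; it writes to stdout instead of editing in place, and it does a plain literal string replacement, so review the result before using it.

<syntaxhighlight lang="bash">
# Build the /dev/mapper path device-mapper uses for a VG/LV pair:
# hyphens inside each name are doubled, then VG and LV are joined by one hyphen.
dm_path() {
    local vg="${1//-/--}" lv="${2//-/--}"
    echo "/dev/mapper/${vg}-${lv}"
}

# Read fstab-style text on stdin. Every active line containing the old prefix
# is printed commented out, followed by a copy using the new prefix.
rewrite_fstab() {
    awk -v old="$1" -v new="$2" '
        # Leave comments and lines without the old prefix alone
        old == "" || /^#/ || index($0, old) == 0 { print; next }
        {
            print "#" $0
            line = $0; out = ""
            # Literal (non-regex) replacement of every occurrence of old
            while ((i = index(line, old)) > 0) {
                out = out substr(line, 1, i - 1) new
                line = substr(line, i + length(old))
            }
            print out line
        }'
}
</syntaxhighlight>

Something like rewrite_fstab "$(dm_path my-full-hostname-vg '')" /dev/sys/ < /etc/fstab > /tmp/fstab.new gives you a file to compare against the original before copying it into place.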

Update /etc/initramfs-tools/conf.d/resume with similar data for swap.

You'll probably want to run 'update-initramfs -u -k all' after this, since the resume setting is read from inside the initramfs.

Now, pray to the nearest god(s), give it a reboot, and it should (probably) work.

After the reboot, run '''swapoff -a''', then '''mkswap /dev/sys/swap_1''' (or whatever it's called on your system), and '''swapon -a''' again. You may want to give it another reboot or two for kicks, but it should work well even without that.

=== Migration tools ===

'''''NOTE:''''' ''Even though it worked well for us, '''always''' keep a good backup before doing this. Things may go wrong, and without a good backup, you may be looking for a hard-to-find new job the next morning''.

==== mdadm workarounds ====

===== Migrate from a mirror (raid-1) to raid-10 =====

Create a mirror:

<syntaxhighlight lang="bash">
mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdb /dev/sdc
</syntaxhighlight>

Put LVM (PV/VG/LV) on md0 (see above), put a filesystem on the LV and fill it with some data.

Now, some months later, you want to change this to raid-10, keeping the same amount of redundancy but allowing more disks into the array.

<pre>
mdadm --grow /dev/md0 --level=10
mdadm: Impossibly level change request for RAID1
</pre>

So, no, that doesn't work. But since we're on LVM already, this'll be easy. If you're using filesystems directly on partitions, you can do this the same way, but without the pvmove part, using rsync or whatever you like instead. I'd recommend using LVM for the new RAID, which should be rather obvious from this article. Now plug in a new drive and create a new raid-10 on that one. If you have two new drives, install both.

<syntaxhighlight lang="bash">
# change "missing" to the other device name if you installed two new drives
mdadm --create /dev/md1 --level=10 --raid-devices=2 /dev/vdd missing
</syntaxhighlight>

Now, as described above, just vgextend the VG with the new RAID and run pvmove to move the data from the old PV (residing on the old raid-1) to the new PV (on raid-10). After pvmove has finished (which may take a while, see above), just run

<syntaxhighlight lang="bash">
vgreduce raidtest /dev/md0
pvremove /dev/md0
mdadm --stop /dev/md0
</syntaxhighlight>

…and your disks are free to be added to the new array. If the new RAID is running in degraded mode (if you created it with just one drive), better not wait too long, since you don't have redundancy. Just mdadm --add the devices.

If /proc/mdstat now tells you your raid-10 is active with three drives, of which one is a spare, it should look something like this:

<pre>
Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md1 : active raid10 vdc[3](S) vdb[2] vdd[0]
      8380416 blocks super 1.2 2 near-copies [2/2] [UU]
</pre>

Then just run '''mdadm --grow --raid-devices=3 /dev/md1''' to make use of the spare.

''The RAID section is not complete. I'll write more on migrating from raid10 to raid6 later. It's not straightforward, but easier than this one :)''

=== Thin provisioned LVs ===

<syntaxhighlight lang="bash">
# Now, create a volume named thinvol with a virtual (-V) size of half a terabyte, using the thin pool (-T) named thin_pool for storage
lvcreate -V 500G -T my_vg/thin_pool --name thinvol
</syntaxhighlight>

The new volume's device name will be /dev/my_vg/thinvol. Now, create a filesystem on it, add it to fstab and mount it. The '''df''' command will report available space according to the virtual size (-V), while lvs will show how much data is actually used on each of the thinly provisioned volumes.
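Since thin volumes can promise more space than the pool actually has, it's worth keeping an eye on the pool's Data% in lvs. A minimal sketch that reads the output of lvs --noheadings -o lv_name,data_percent and flags LVs at or above a threshold; the function name and the 80% default are my own assumptions, and it assumes lvs prints a dot as the decimal separator.

<syntaxhighlight lang="bash">
# Read "lv_name data_percent" lines on stdin and print those at or above
# the threshold (default 80). Exits 1 if any LV was flagged.
thin_usage_check() {
    local threshold="${1:-80}"
    awk -v thr="$threshold" '
        # Thick LVs have no Data% value, so they only have one field
        NF >= 2 && ($2 + 0) >= thr { printf "%s %s%%\n", $1, $2; found = 1 }
        END { exit found ? 1 : 0 }'
}
</syntaxhighlight>

A cron-friendly use would be something like lvs --noheadings -o lv_name,data_percent my_vg | thin_usage_check 80 || echo "thin pool filling up".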

== Filesystem dilemmas ==

=== XFS or ext4 or something else ===

The choice of filesystem depends on your use. Distros such as RHEL/CentOS have moved to XFS by default from version 7. Debian/Ubuntu still stick to ext4, like most others. I'll discuss my thoughts on ext4 vs XFS below and leave the other filesystems for later.

==== ext4 ====

ext4 is probably the most used filesystem on Linux as of writing. It's rock stable and has gained a lot of extensions since the original ext2. It still retains backward compatibility, being able to mount and fsck ext2 and ext3 with the ext4 driver. However, it doesn't handle large filesystems very well. An ext4 filesystem created without the 64bit feature, which used to be the norm for filesystems under 16TiB, can't be grown beyond 16TiB; newer versions of e2fsprogs enable 64bit by default, so this mostly bites older filesystems. Another issue is error handling. If a large filesystem (say 10TiB) is damaged, an fsck may take several hours, leading to long downtime. When I refer to ext4 further in this text, the same applies to ext2 and ext3 unless otherwise specified.

==== XFS ====

XFS was originally developed by SGI some time in the bronze age, but has been worked on heavily since. RHEL and CentOS version 7 and later use XFS as the default filesystem. It is quick and scales well, but it lacks at least two things ext4 has. It cannot be shrunk (not that you normally need that, but it's nice if you made a typo or need to change things). Also, it doesn't allow for automatic fsck on bootup. If something is messed up on the filesystem, you'll have to log in as root on the console (not ssh), which might be an issue on some systems. The fsck equivalent, xfs_repair, is a ''lot'' faster than ext4's fsck, though.

==== ZFS ====

ZFS is like a combination of RAID, LVM and a filesystem, supporting full checksumming, self-healing (given sufficient redundancy), compression, encryption, replication, deduplication (if you're brave and have a ''ton'' of memory) and a lot more. It has its own chapter and needs a lot more talk than a paragraph here.

==== btrfs ====

btrfs, pronounced "butterfs" or "betterfs" or just spelled out, is a filesystem aiming for much of what ZFS offers. It has been around for 10 years or so, but hasn't yet proven to be really stable. Following its progress over those years, I've found it to be a nice toy to play with, but not something to use in production. Again, others may say otherwise, but these are just my words.

== Low level disk handling ==

This section is for low-level handling of disks, regardless of the storage system used on top. It applies to mdraid, lvmraid, zfs and possibly other solutions, with the exception of individual drives on hwraid (and maybe fakeraid), since there, the drives are hidden from Linux (or whatever OS).

=== S.M.A.R.T. and slow drives ===

Modern drives (that is, from about 1990 or later) have S.M.A.R.T. (or just smart, since it's easier to type) monitoring built in, meaning the disk's controller monitors itself. This is handy and will ideally tell you in advance that a drive is flaky or dying. Modern (2010+) smart monitoring still works more or less the same way, and it can be trusted about 50% of the time. Usually, if smart detects an error, it's a real error. I don't think I've seen it report a false positive, but others may know better.

==== smartmontools ====

First, install smartmontools and run '''smartctl -H /dev/yourdisk''' (/dev/sda or similar) to get a health report. Then perhaps run '''smartctl -a /dev/yourdisk''' and look for 'Current_Pending_Sector'. This should be zero. Also check the temperature, which normally shouldn't be much above 50°C.
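To avoid eyeballing the attribute table every time, something like this could pull the two values mentioned above out of smartctl -A. A minimal sketch; it assumes the usual ATA attribute table where RAW_VALUE is the 10th column, so it won't work on NVMe or SAS drives, which report these differently. The function name and the 50°C limit are assumptions.

<syntaxhighlight lang="bash">
# Read smartctl -A output on stdin, print the pending sector count and
# temperature, and exit 1 if there are pending sectors or the drive runs hot.
smart_check() {
    local max_temp="${1:-50}"
    awk -v max="$max_temp" '
        $2 == "Current_Pending_Sector" { pending = $10 }
        $2 == "Temperature_Celsius"    { temp = $10 }
        END {
            printf "pending=%s temp=%s\n", pending, temp
            exit (pending + 0 > 0 || temp + 0 > max) ? 1 : 0
        }'
}
</syntaxhighlight>

Usage would be along the lines of smartctl -A /dev/sda | smart_check || echo "have a closer look at /dev/sda".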

==== single, slow drive ====

What may happen is that smart doesn't see anything and life goes on like before, only slower and less productive, without anyone being told. If you stumble over this issue, you'll probably see it during a large rsync, a zfs send/receive or a resync/rebuild/resilver of the RAID/zpool. During this, it feels like everything is slow and your RAID has turned into a RAIF (redundant array of inexpensive floppies). To check whether a single drive is messing it all up, run '''iostat -x 2''', where 2 is the delay in seconds between each measurement. Interrupt it with ctrl+c. If there's a rogue drive there, it should stand out like sdg below:

<pre>
avg-cpu: %user %nice %system %iowait %steal %idle
 0.38 0.00 1.75 0.00 0.00 97.87

Device r/s w/s rkB/s wkB/s rrqm/s wrqm/s %rrqm %wrqm r_await w_await aqu-sz rareq-sz wareq-sz svctm %util
sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sdb 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sdc 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sdd 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sde 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sdf 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
sdg 3.50 0.00 2352.00 0.00 0.00 0.00 0.00 0.00 14306.29 0.00 13.85 672.00 0.00 285.71 100.00
sdh 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
</pre>

In ZFS land, you should be able to get similar data with '''zpool iostat -v <pool>'''.
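With many drives, the rogue one may be easier to spot with a filter. A minimal sketch that reads iostat -x output and prints devices whose %util is at or above a threshold. It looks up the %util column from the Device header, since the column layout has changed between sysstat versions. The function name and the 90% default are assumptions, and a busy drive during a resync isn't necessarily a bad one, so compare it with its peers.

<syntaxhighlight lang="bash">
# Read iostat -x output on stdin and print "device %util" for every device
# at or above the threshold (default 90).
find_slow_drives() {
    local threshold="${1:-90}"
    awk -v thr="$threshold" '
        # Older iostat prints "Device:", newer versions "Device"
        $1 == "Device" || $1 == "Device:" {
            col = 0
            for (i = 1; i <= NF; i++) if ($i == "%util") col = i
            next
        }
        col && NF >= col && ($col + 0) >= thr { print $1, $col }'
}
</syntaxhighlight>

For example, iostat -x 2 3 | find_slow_drives 90 takes three samples; keep in mind that the first report covers the time since boot.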

Now that you know the bad drive (sdg), pull it from the RAID with '''mdadm --fail /dev/mdX /dev/sdX'''. Then test the drive's performance manually, simply:

<pre>
# hdparm -t /dev/sdg

/dev/sdg:
 Timing buffered disk reads: 2 MB in 5.37 seconds = 381.46 kB/sec
</pre>

Bingo, nothing you'd want to keep. A good drive should give something like 100-200MB/s.

PS: Keep in mind that you now have a degraded RAID, at least until a spare (if you had one waiting for things to happen) has finished rebuilding.

Try replacing the cable and/or try the disk on another port/controller. If the poor result from hdparm persists, it's the drive and not the cable.

So, then, just physically locate the drive, pull it out, take out the platters and magnets, and recycle the rest.

=== "Unplug" drive from system ===

<syntaxhighlight lang="bash">
# Find the device unit's number with something like
find /sys/bus/scsi/devices/*/block/ -name sdd
# returning /sys/bus/scsi/devices/3:0:0:0/block/sdd here,
# meaning 3:0:0:0 is the SCSI device we're looking for.
# Given this is the correct device, and you want to 'unplug' it, do so by
echo 1 > /sys/bus/scsi/devices/3:0:0:0/delete
</syntaxhighlight>
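The lookup can be wrapped in a small helper that only prints the SCSI address, so you can double-check it before writing to delete. A minimal sketch; the function name scsi_addr_of is made up, and the optional second argument (an alternative sysfs root) is only there to make it testable.

<syntaxhighlight lang="bash">
# Print the SCSI address (H:C:T:L) of a block device such as sdd.
# Usage: scsi_addr_of <disk> [sysfs-root]
scsi_addr_of() {
    local dev="$1" sysfs="${2:-/sys}" path
    for path in "$sysfs"/bus/scsi/devices/*/block/"$dev"; do
        # An unmatched glob stays literal, so check that the path exists
        [ -e "$path" ] || continue
        path="${path%/block/*}"      # strip /block/<disk>
        printf '%s\n' "${path##*/}"  # keep only the H:C:T:L part
        return 0
    done
    return 1
}
</syntaxhighlight>

Then addr=$(scsi_addr_of sdd) && echo 1 > "/sys/bus/scsi/devices/$addr/delete" does the unplugging, once you're sure it's the right drive.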

<syntaxhighlight lang="bash">
# Make a list of controllers and send a rescan message to each of them. It won't do anything
# for those where nothing has changed, but it will show new drives where they have been added.
# '- - -' are wildcards for channel, target and LUN.
for host_scan in /sys/class/scsi_host/host*/scan
do
    echo '- - -' > "$host_scan"
done
</syntaxhighlight>

=== Fail injection with debugfs ===

[https://lxadm.com/Using_fault_injection https://lxadm.com/Using_fault_injection]

== mdadm stuff ==

=== Resync ===

To resync a RAID, that is, check it, run

<syntaxhighlight lang="bash">
echo check > /sys/block/$dev/md/sync_action
</syntaxhighlight>

where $dev is something like md0. If you have several RAIDs, something like this should work:

<syntaxhighlight lang="bash">
for dev in md{0,1}
do
    echo repair > /sys/block/$dev/md/sync_action
done
</syntaxhighlight>

It's also useful as a one-liner, like

<syntaxhighlight lang="bash">
for dev in md{0,1} ; do echo repair > /sys/block/$dev/md/sync_action ; done
</syntaxhighlight>

RAIDs on different drives will do this in parallel. If the drives are shared somehow, with partitions or similar, the check or repair will wait for the other to finish before going on.

As for repair vs check, [https://raid.wiki.kernel.org/index.php/RAID_Administration this article] describes it well. I stick to using repair unless it's something rare where I don't want to touch the data.
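If you'd rather not list the arrays by hand, a variant of the loop above could pick up every md device in sysfs. A minimal sketch; the function name md_sync_all is made up, it refuses anything but check or repair, and the optional sysfs root argument is there only for testing.

<syntaxhighlight lang="bash">
# Start a check or repair on every md array found in sysfs.
# Usage: md_sync_all check|repair [sysfs-root]
md_sync_all() {
    local action="$1" sysfs="${2:-/sys}" f rc=1
    case "$action" in
        check|repair) ;;
        *) echo "md_sync_all: action must be check or repair" >&2; return 2 ;;
    esac
    for f in "$sysfs"/block/md*/md/sync_action; do
        [ -e "$f" ] || continue
        echo "$action" > "$f" && rc=0
    done
    # Non-zero if no array was found (or no write succeeded)
    return "$rc"
}
</syntaxhighlight>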

=== Spare pools ===

In the old times, mdadm supported only a dedicated spare per md device. So if you were working with a large set of drives (20? 40?) and wanted several RAID sets to increase redundancy, you'd dedicate a spare to each of the RAID sets. This has changed (some time ago?), so in these modern, heathen days, you can edit mdadm.conf, usually placed under /etc/mdadm, and add 'spare-group=somegroup' to the ARRAY lines, where 'somegroup' is a string identifying the spare group. After doing this, run '''update-initramfs -u''', reboot, and add a spare to one of the RAID sets in the spare group, and md will use that spare (or those spares) for all the RAID sets in that group. Note that moving spares between arrays is done by mdadm running in monitor mode (mdadm --monitor), so that needs to be running.

As some pointed out on #linux-raid @ irc.freenode.net, this feature is very badly documented, but as far as I can see, it works well.

==== Example config ====

<syntaxhighlight lang="bash">
# Create two raidsets
mdadm --create /dev/md1 --level=6 --raid-devices=6 /dev/vd[efghij]
mdadm --create /dev/md2 --level=6 --raid-devices=6 /dev/vd[klmnop]
# get their UUID etc
mdadm --detail --scan
#   ARRAY /dev/md/1 metadata=1.2 name=raidtest:1 UUID=1a8cbdcb:f4092350:348b6b80:c054d74c
#   ARRAY /dev/md/2 metadata=1.2 name=raidtest:2 UUID=894b1b7c:cb7eba70:917d6033:ea5afd2b
# Put those lines into /etc/mdadm/mdadm.conf and add the spare-group:
#   ARRAY /dev/md/1 metadata=1.2 name=raidtest:1 spare-group=raidtest UUID=1a8cbdcb:f4092350:348b6b80:c054d74c
#   ARRAY /dev/md/2 metadata=1.2 name=raidtest:2 spare-group=raidtest UUID=894b1b7c:cb7eba70:917d6033:ea5afd2b
# update the initramfs and reboot
update-initramfs -u
reboot
# add a spare drive to one of the raids
mdadm --add /dev/md1 /dev/vdq
# fail a drive on the other raid
mdadm --fail /dev/md2 /dev/vdn
# check /proc/mdstat to see md2 rebuilding with the spare from md1
</syntaxhighlight>
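Adding the spare-group to each ARRAY line by hand is easy with two arrays, but with more, something like this could do it. A minimal sketch that reads mdadm --detail --scan output on stdin and appends spare-group=<group> to ARRAY lines lacking one. The function name is made up, and I'm assuming the position of spare-group on the line doesn't matter to mdadm (in the example above, it sits before UUID).

<syntaxhighlight lang="bash">
# Append spare-group=<group> to every ARRAY line that doesn't have one yet.
# Other lines (comments, DEVICE, MAILADDR...) pass through unchanged.
add_spare_group() {
    local group="$1"
    awk -v grp="$group" '
        /^ARRAY/ && !/spare-group=/ { $0 = $0 " spare-group=" grp }
        { print }'
}
</syntaxhighlight>

Running mdadm --detail --scan | add_spare_group raidtest prints the lines to paste into mdadm.conf in place of the old ARRAY lines.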

=== Recovery ===

The other day, I came across a problem a user had on #linux-raid@irc.freenode.net. He had an old QNAP NAS that had died, tried plugging the drives into his Linux machine and got various errors. The two-drive RAID-1 did not come up as it should, '''dmesg''' was full of errors from one of the drives (both connected over USB) and so forth.

We started by taking down the machine and connecting just one of the drives. I had him check what was assembled with

<syntaxhighlight lang="bash">
cat /proc/mdstat
</syntaxhighlight>

That showed /dev/md127 assembled, but LVM didn't find anything on it. So we ran a manual scan:

<syntaxhighlight lang="bash">
pvscan --cache /dev/md127
</syntaxhighlight>

This made Linux find the PV, the VG and a couple of LVs, but, probably because the mdraid was degraded, the LVs were inactive according to '''lvscan'''. So we activated the one with a filesystem manually:

<syntaxhighlight lang="bash">
lvchange -a y /dev/<vg>/<lv>
</syntaxhighlight>

Substitute <vg> and <lv> with the names listed by vgs and lvs.

This worked, and the filesystem on /dev/<vg>/<lv> could be mounted correctly.

=== Renaming a RAID ===

''Not finished''

<!--

Back around 2001, I asked on the linux-raid list for disk tagging, not default back then, but supported, and thought of the possibility of disk #1 getting replaced with disk #1 from another raid because of a messup. After this, the hostname was added to the raid name in the superblock. So when a RAID is created, it is named "hostname:raidname", where the raidname is either a number (0,1...) or a name.

For instance, running this on my VM named "raidtest":

mdadm --create --level=1 --raid-devices=2 /dev/md/stuff /dev/sd[st]

mdadm --detail --scan now shows this

ARRAY /dev/md/stuff metadata=1.2 name=raidtest:stuff UUID=c4cdf69f:393a28b1:47648ed3:726613de

Now, you might want to change this name for some reason, and it's not supported under "grow", so what to do… The name is, after all, local to the disk, since it's there to avoid messing up a raid (or two) after a disk mixup.

mdadm --assemble /dev/md/stuff --name 'someotherhost:stuff' --update=homehost /dev/sd[st]

-->

=== The badblock list ===

'''md''' has an internal badblock list (BBL), added around 2010, that lists bad blocks on the disk to avoid using them. While this might sound like a good idea, the BBL is updated on any read error, and read errors can be caused by several things. To make things worse, the BBL is replicated to other drives when a drive fails or the array is grown. So if you start out with sda and sdb and sdb suffers some errors, nothing's wrong: md will read from sda, but flag those sectors on sdb as bad. After a while, perhaps sda dies and gets replaced, and the new drive gets the same content, including the BBL. Then, at some point, you might replace sdb, and it, too, gets the BBL. So it sticks, forever. Also, there's no real point in keeping a BBL, since the drive has its own, and even if a sector error occurs, the array will have sufficient redundancy to recover from it (unless you use RAID-0, which you shouldn't). If a drive finds a bad sector, the drive itself will reallocate it without the user's/admin's/system's knowledge. If a sector can't be reallocated, the drive is bad and should be replaced.

There's no official way to remove it, and although you can assemble the array with the no-bbl option, this only works if the BBL is empty. It also requires downtime. As far as I can see, the only way to do this currently is with a wee hack that should work. Just replace the mddev/diskdev names with your own.

<syntaxhighlight lang="bash">
#!/bin/bash
# Fail, remove and re-add one member, dropping its (empty) bad block list on the way.
set -eu
mddev="/dev/md0"
diskdev="/dev/sda"
mdadm "$mddev" --fail "$diskdev" --remove "$diskdev" --re-add "$diskdev" --update=no-bbl
</syntaxhighlight>

If there's already a BBL on the drive, change the --update part to 'force-no-bbl'. This isn't documented anywhere as such, but it works.

I haven't found a way to turn this off permanently, but something may come up when the debate on this on the linux-raid mailing list settles. There's a bbl=no in mdadm.conf, but that's only for creation. I will continue extensive testing of this.

== Tuning ==

While comparing a 12-disk RAID (8TB disks) to a hwraid, mdraid was slower, so we did a few things to speed things up:

While testing with [https://linux.die.net/man/1/fio fio], monitor /sys/block/md0/md/stripe_cache_active and see if it's close to /sys/block/md0/md/stripe_cache_size. If it is, double the size of the latter, for instance:

<syntaxhighlight lang="bash">
echo 4096 > /sys/block/md0/md/stripe_cache_size
</syntaxhighlight>

Continue until stripe_cache_active stabilises below it; then you've found the limit you need. You may want to double that for good measure.
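Keep in mind that the stripe cache isn't free: the Linux RAID wiki gives its memory use as page size × number of member disks × stripe_cache_size, and as far as I know the kernel caps stripe_cache_size at 32768. A small sketch of that arithmetic plus the doubling rule above; the function names and the "close to" threshold (90%) are assumptions.

<syntaxhighlight lang="bash">
# Memory (bytes) used by the stripe cache:
# page size * number of member disks * stripe_cache_size
stripe_cache_bytes() {
    local size="$1" disks="$2" page="${3:-4096}"
    echo $(( page * disks * size ))
}

# Suggest the next stripe_cache_size: double it if stripe_cache_active is
# within 90% of it, but never go above the kernel limit of 32768.
next_stripe_cache_size() {
    local active="$1" size="$2" limit=32768
    if (( active * 10 >= size * 9 )); then
        size=$(( size * 2 ))
        if (( size > limit )); then
            size=$limit
        fi
    fi
    echo "$size"
}
</syntaxhighlight>

For the 12-disk RAID at 4096, stripe_cache_bytes 4096 12 gives 201326592 bytes, i.e. 192MiB of RAM. To get a suggestion from the live values: next_stripe_cache_size "$(cat /sys/block/md0/md/stripe_cache_active)" "$(cat /sys/block/md0/md/stripe_cache_size)".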

(to be continued)

== Links ==

Below are a few links with useful info

* [https://raid.wiki.kernel.org/index.php/Irreversible_mdadm_failure_recovery Irreversible mdadm failure recovery]

= ZFS =

ZFS can do a lot of things most filesystems or volume managers can't. It checksums all data and metadata, and as long as the system uses ECC-enabled memory (RAM), it has complete control over the whole data set. When (not if) an error occurs, it'll fix the issue on the fly without any fuss; the only trace is the error counters in '''zpool status'''. To fix the issue, you'll obviously need sufficient redundancy (mirrors or RAIDzN).

== ZFS send/receive between machines ==

ZFS has a send/receive mechanism that allows snapshots to be sent over the wire to a receiving end. This can be a full filesystem or an incremental change since the last send/receive. In a WAN scenario, you'll probably want to use a VPN for this. On a controlled network, sending in cleartext is also possible. You may use ssh as well, but keep in mind that ssh was never designed to be a full-blown VPN solution and is just too slow for the task with large amounts of data. On a local LAN, you may use mbuffer instead.

<pre>
# Allow traffic from the sending IP in whatever firewall interface you're using (if you're using a firewall at all, that is)
ufw allow from x.x.x.x
#
# Start the receiver first. This listens on port 9090, has a 1GB buffer,
# and uses 128kb chunks (same as zfs):
mbuffer -s 128k -m 1G -I 9090 | zfs receive data/filesystem
</pre>
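The sending side isn't shown above. A minimal sketch of it, assuming mbuffer's -O host:port option on the sender to match -I 9090 on the receiver; the function name zfs_send_mbuffer is made up, and passing a base snapshot turns it into an incremental send (zfs send -i). Start the receiver first.

<syntaxhighlight lang="bash">
# Send a snapshot through mbuffer to a receiver listening with mbuffer -I.
# Usage: zfs_send_mbuffer <snapshot> <receiver-host> [port] [base-snapshot]
zfs_send_mbuffer() {
    local snapshot="$1" receiver="$2" port="${3:-9090}" base="${4:-}"
    if [ -n "$base" ]; then
        # Incremental: only the changes between base and snapshot
        zfs send -i "$base" "$snapshot"
    else
        # Full stream of the snapshot
        zfs send "$snapshot"
    fi | mbuffer -s 128k -m 1G -O "${receiver}:${port}"
}
</syntaxhighlight>

For instance, zfs_send_mbuffer data/filesystem@today 10.0.0.2 sends a full stream, while adding 9090 @yesterday as the last two arguments sends only what changed since @yesterday, which the receiving filesystem must already have.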

To be continued one day… See [https://everycity.co.uk/alasdair/2010/07/using-mbuffer-to-speed-up-slow-zfs-send-zfs-receive/ this] for some more. I'll get back with details on it.

= General Linux system control =

== Add a new CPU (core) ==

If you're working with virtual machines, adding a new core can be useful if the VM is slow and the load is multithreaded or multiprocess. To do this, add a new CPU in the hypervisor (be it KVM, VMware, Hyper-V or whatever). Some hypervisors, like VMware, will activate it automatically if open-vm-tools is installed. Please note that open-vm-tools is for VMware only. I don't know of any such thing for KVM or other hypervisors (although I believe VirtualBox has its own set of tools, but not as a package). If this doesn't work, run

<pre>
echo 1 > /sys/devices/system/cpu/cpuX/online
</pre>

where X is the number of the CPU you want to add. You can 'cat /sys/devices/system/cpu/cpuX/online' to check whether it's online.
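If you've added several cores, a loop over the sysfs files could bring all the offline ones up in one go. A minimal sketch; the function name online_all_cpus is made up, and the optional sysfs root argument exists only to make it testable. cpu0 usually has no online file, which the loop simply skips.

<syntaxhighlight lang="bash">
# Bring every offline CPU online and report which ones were changed.
# Usage: online_all_cpus [sysfs-root]
online_all_cpus() {
    local sysfs="${1:-/sys}" f cpu
    for f in "$sysfs"/devices/system/cpu/cpu[0-9]*/online; do
        [ -e "$f" ] || continue
        # 0 means offline; writing 1 asks the kernel to bring it online
        if [ "$(cat "$f")" = 0 ]; then
            cpu="${f%/online}"
            echo 1 > "$f" && echo "onlined ${cpu##*/}"
        fi
    done
}
</syntaxhighlight>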

= Mount partitions on a disk image = | |||

Sometimes you'll have a disk image, either from recovering a bad drive after hours (or days or weeks) of ddrescue, and sometimes you just have a disk image from a virtual machine where you want to mount the partitions inside the image. There are three main methods of doing this, which are more or less synonmous, but some easier than the other. | |||

== Basics == | |||

A disk image is usually like a disk, consisting of either a large filesystem, a PV or a partition table with one or partitions onto which resides a filesystem, a pv, swap or something else. If it's partitioned, and you want your operating system to mount a filesystem or allow it to see whatever LVM stuff lies on it, you need to isolate the partitions. | |||

== Manual mapping == | |||

In the oldern days, this was done manually, something like this | |||

<pre>
root@mymachine:~# fdisk -l /dev/vda
Disk /dev/vda: 25 GiB, 26843545600 bytes, 52428800 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0xc37b7ea5

Device     Boot    Start      End  Sectors  Size Id Type
/dev/vda1  *        2048 50333311 50331264   24G 83 Linux
/dev/vda2       50333312 52426369  2093058 1022M  5 Extended
/dev/vda5       50333314 52426369  2093056 1022M 82 Linux swap / Solaris
root@mymachine:~#
</pre>

To calculate the offset of each partition, take its start sector and multiply it by the sector size (512 bytes here), which gives you the offset in bytes. For /dev/vda1 above, that is 2048 * 512 = 1048576 bytes. After this, it's merely a matter of running losetup -o <offset> /dev/loopX <nameofimagefile>, and you have the partition mapped to /dev/loopX (X typically being something in the range 0-255). After this, just mount it, or run pvscan/vgscan/lvscan to probe whatever LVM config is there, and you should be able to mount it like any other filesystem.
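
The arithmetic above, plus the partition length so the loop device doesn't run past the end of the partition, can be wrapped in a small helper that prints the losetup command for you. This is a sketch; losetup_cmd is a made-up name, and it assumes the start sector and sector count are taken straight from fdisk -l as shown above. The -f --show options make losetup pick the first free loop device and print its name, and --sizelimit caps the mapping at the partition size.

<syntaxhighlight lang="bash">
# Print a losetup command that maps one partition of a disk image.
# Arguments: image file, start sector, number of sectors (from fdisk -l)
# and, optionally, the sector size in bytes (defaults to 512).
losetup_cmd() {
    local image=$1 start=$2 sectors=$3 secsize=${4:-512}
    local offset=$(( start * secsize ))   # where the partition begins
    local size=$(( sectors * secsize ))   # how long it is
    echo "losetup -f --show -o $offset --sizelimit $size $image"
}
</syntaxhighlight>

For /dev/vda1 above, losetup_cmd disk.img 2048 50331264 prints losetup -f --show -o 1048576 --sizelimit 25769607168 disk.img. Newer versions of losetup can also do the whole job with losetup -f --show -P disk.img, which scans the partition table and creates /dev/loopXpN devices.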

== kpartx ==

'''kpartx''' does the same as above, more or less, just without so much hassle. Most of it should be automatic and easy to deal with, except that it sometimes fails to disconnect the loopback devices, so you may have to '''losetup -d''' them manually. See the manual, '''kpartx(8)'''. Please note that '''partx''' works similarly, and I'd guess the authors of the two tools argue a lot about which one's the best.

== guestmount ==

'''guestmount''' is something similar again, but also supports image formats like '''qcow2''' and possibly others such as '''vmdk''' (VMware) and '''vdi''' (VirtualBox), but don't take my word for it - I haven't tested. Again, see the manual or just read the description [http://ask.xmodulo.com/mount-qcow2-disk-image-linux.html?fbclid=IwAR3snTeZvGzp9PLdX11EQhCfOpux6I93CiJ3A2pUomkkRLly9Xo4851mkTY here]. It seems rather trivial.

= Linux on laptops =

== Wifi with HP EliteBook 725/745 on Ubuntu ==

The HP EliteBook 725/745 and similar models are equipped with a BCM4352 wifi chip. As with a lot of other stuff from Broadcom, there is no open hardware description for it, and thus no open driver exists. The proper fix is to replace the NIC with something that has an open driver, but since this isn't always possible, a binary driver exists. In Ubuntu, somehow connect the machine to the internet and run

<pre>
sudo apt-get update
sudo apt-get install bcmwl-kernel-source
sudo modprobe wl
</pre>

If you can't connect the machine to the internet, download the needed packages on another machine and put them on a USB stick or similar. These packages should suffice:

<pre>
# apt-cache depends bcmwl-kernel-source
bcmwl-kernel-source
  Depends: dkms
  Depends: linux-libc-dev
  Depends: libc6-dev
  Conflicts: <bcmwl-modaliases>
  Replaces: <bcmwl-modaliases>
</pre>

Then install them manually with '''dpkg -i file1.deb file2.deb''' etc.

After this, ip/ifconfig/iwconfig should see the new wifi NIC, probably '''wlan0''', but due to an [https://bugs.launchpad.net/ubuntu/+source/broadcom-sta/+bug/1343151 old, ignored bug], you won't find any wifi networks. This is because the driver from Broadcom apparently does not support interrupt remapping, commonly used on x64 machines. To turn this off, edit '''/etc/default/grub''' and change the line '''GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"''' by adding intremap=off at the end, before the closing quote: '''GRUB_CMDLINE_LINUX_DEFAULT="quiet splash intremap=off"'''. Save the file, run '''sudo update-grub''' and reboot. After this, wifi should work well.
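
If you'd rather script the GRUB change, for instance when fixing several of these laptops, something like the following sketch should do. add_grub_param is a made-up helper; it assumes the parameter contains no slashes (which would confuse sed) and that GRUB_CMDLINE_LINUX_DEFAULT sits on a single line in double quotes, as in Ubuntu's default file. It only adds the parameter if it isn't there already.

<syntaxhighlight lang="bash">
# Append a kernel parameter to GRUB_CMDLINE_LINUX_DEFAULT, unless it's already there.
# The second argument (the file) defaults to /etc/default/grub.
add_grub_param() {
    local param=$1 file=${2:-/etc/default/grub}
    # Nothing to do if the parameter is already on the line
    if grep '^GRUB_CMDLINE_LINUX_DEFAULT=' "$file" | grep -qw -- "$param"; then
        return 0
    fi
    # Insert the parameter just before the closing quote
    sed -i -E "s/^(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*)\"/\1 ${param}\"/" "$file"
}
</syntaxhighlight>

As root, add_grub_param intremap=off && update-grub should give the same result as the manual edit above.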

== Wifi with HP Compaq Presario CQ57 on Ubuntu ==

The HP Compaq Presario CQ57 uses the RT5390 wifi chipset. This has been supported for quite some time now, and I was quite surprised to find it not working on a friend's laptop. The driver loaded correctly, but Ubuntu insisted on the laptop being in '''flight mode'''. Checking '''rfkill''' showed me two NICs.

<pre>
# rfkill list all
0: phy0: Wireless LAN
	Soft blocked: no
	Hard blocked: yes
1: hp-wifi: Wireless LAN
	Soft blocked: yes
	Hard blocked: no
</pre>

Toggling rfkill on and off achieved little, except producing some error messages in the kernel log (dmesg):

<pre>
[fr. jan. 24 15:10:20 2020] ACPI Error: Field [B128] at bit offset/length 128/1024 exceeds size of target Buffer (160 bits) (20170831/dsopcode-235)
[fr. jan. 24 15:10:20 2020]
Initialized Local Variables for Method [HWCD]:
[fr. jan. 24 15:10:20 2020] Local0: 00000000a34b7928 <Obj> Integer 0000000000000000
[fr. jan. 24 15:10:20 2020] Local1: 00000000b0aa6865 <Obj> Buffer(8) 46 41 49 4C 04 00 00 00
[fr. jan. 24 15:10:20 2020] Local5: 00000000a9811efd <Obj> Integer 0000000000000004
[fr. jan. 24 15:10:20 2020] Initialized Arguments for Method [HWCD]: (2 arguments defined for method invocation)
[fr. jan. 24 15:10:20 2020] Arg0: 0000000078957d1b <Obj> Integer 0000000000000001
[fr. jan. 24 15:10:20 2020] Arg1: 00000000725c9c6e <Obj> Buffer(20) 53 45 43 55 02 00 00 00
[fr. jan. 24 15:10:20 2020] ACPI Error: Method parse/execution failed \_SB.WMID.HWCD, AE_AML_BUFFER_LIMIT (20170831/psparse-550)
[fr. jan. 24 15:10:20 2020] ACPI Error: Method parse/execution failed \_SB.WMID.WMAD, AE_AML_BUFFER_LIMIT (20170831/psparse-550)
</pre>

After upgrading the BIOS and testing different kernels, I was asked to try unloading the '''hp_wmi''' driver. I did, and things changed a bit, so I tried blacklisting it by creating the file '''/etc/modprobe.d/blacklist-hp_wmi.conf''' with the following content

<pre>
# Blacklist this - it doesn't work!
blacklist hp_wmi
</pre>

I gave the machine a reboot, and after that the '''hp-wifi''' entry was gone from the rfkill output, and things worked.

= Raspberry pi =

I couldn't quite decide if the raspberry pi should be a separate section or part of Linux, but hell, it can run more than Linux, although 99.lots% of people just use Linux on them. This is a small list of things to know about them; things not on the front page of the manual or perhaps hidden even better.

== Which pi? ==

I just set up three raspberry pi machines, and although some models are quite different, like v1 versus vEverythingelse, or the Pi Zero versions versus the normal ones, not all of us remember how to tell them apart, and sometimes they're in a nice 3D printed (or otherwise) chassis or otherwise hidden. So, here's how to check three different ones:

<pre>
root@home-assistant:~ $ cat /proc/device-tree/model ; echo
Raspberry Pi 2 Model B Rev 1.1
root@green-pi:~ $ cat /proc/device-tree/model ; echo
Raspberry Pi 3 Model B Rev 1.2
roy@yellow-pi:~ $ cat /proc/device-tree/model ; echo
Raspberry Pi 4 Model B Rev 1.1
</pre>

While this works well, for the latter, the Pi 4, it doesn't tell how much memory the board has. The Pi 4 comes in variants of 1, 2 and 4GB (and later 8GB), and this one is a 4GB model.

For a more detailed list, the command '''awk '/^Revision/ {sub("^1000", "", $3); print $3}' /proc/cpuinfo''' will give you a revision number, detailing the type of pi, who produced it etc. Please see [https://elinux.org/RPi_HardwareHistory https://elinux.org/RPi_HardwareHistory] for a full table.
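
Since the model string doesn't say how much memory a Pi 4 has, the revision code can fill that gap. To the best of my knowledge of the revision code layout documented by the Raspberry Pi folks (double-check against the table linked above), new-style codes have bit 23 set and store the memory size in bits 20-22, where 0 means 256MB and each step doubles it. A sketch of a decoder; pi_mem_mb is a made-up name:

<syntaxhighlight lang="bash">
# Memory size in MB from a new-style Raspberry Pi revision code such as c03111.
# Bit 23 flags a new-style code; bits 20-22 hold the size (0=256MB, 1=512MB,
# 2=1GB, 3=2GB, 4=4GB, 5=8GB), i.e. 256MB shifted left by that value.
pi_mem_mb() {
    local rev=$(( 16#$1 ))
    if (( (rev >> 23) & 1 )); then
        echo $(( 256 << ((rev >> 20) & 7) ))
    else
        echo unknown    # old-style code: look it up in the table instead
    fi
}
</syntaxhighlight>

On the pi itself, pi_mem_mb "$(awk '/^Revision/ {sub("^1000", "", $3); print $3}' /proc/cpuinfo)" feeds it the revision from the awk command above. A 4GB Pi 4 Model B Rev 1.1 reports c03111, which decodes to 4096.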

== Monitoring ==

'''vcgencmd''', located in '''/opt/vc/bin''' but symlinked to '''/usr/bin''' so it's available in the path, is useful for fetching hardware info about the pi. For example, measuring the temperature:

<pre>
root@yellow-pi:~# vcgencmd measure_temp
temp=63.0'C
</pre>
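
For scripts, the temp=63.0'C format is a bit awkward. A minimal sketch that strips it down to a number and compares it with a threshold; the function names are made up, and the 70 degree limit is an arbitrary example, not an official figure.

<syntaxhighlight lang="bash">
# Strip vcgencmd's "temp=63.0'C" down to "63.0"
parse_temp() {
    local out=$1
    out=${out#temp=}     # drop the leading "temp="
    out=${out%%\'*}      # drop the trailing "'C"
    echo "$out"
}

# Print OK or WARNING depending on an example threshold of 70 degrees.
# Without an argument, it asks vcgencmd directly (only works on a pi).
check_temp() {
    local t
    t=$(parse_temp "${1:-$(vcgencmd measure_temp)}")
    if awk -v t="$t" 'BEGIN { exit !(t + 0 > 70) }'; then
        echo "WARNING: ${t}C"
    else
        echo "OK: ${t}C"
    fi
}
</syntaxhighlight>

With the reading above, check_temp "temp=63.0'C" prints OK: 63.0C; on the pi, plain check_temp reads the sensor itself.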

The command is generally badly documented, and parts of it seem outdated for newer hardware. Here's the output from a Raspberry Pi 4 with 4GB RAM:

<pre>
roy@yellow-pi:~ $ vcgencmd get_mem arm
arm=948M
roy@yellow-pi:~ $ vcgencmd get_mem gpu
gpu=76M
</pre>
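
Note that the two numbers above add up to only 1024MB (948 + 76), so on this 4GB board get_mem apparently only describes the first gigabyte. A sketch for comparing that split with what the kernel itself reports as MemTotal in /proc/meminfo (in kB); both function names are made up.

<syntaxhighlight lang="bash">
# Add up the ARM and GPU split as printed by vcgencmd ("arm=948M", "gpu=76M")
split_total_mb() {
    local arm=${1#arm=} gpu=${2#gpu=}
    echo $(( ${arm%M} + ${gpu%M} ))
}

# Total RAM as seen by the kernel, in MB (MemTotal in /proc/meminfo is in kB)
kernel_mem_mb() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' /proc/meminfo
}
</syntaxhighlight>

On the pi, compare split_total_mb "$(vcgencmd get_mem arm)" "$(vcgencmd get_mem gpu)" with kernel_mem_mb; on a 4GB board the latter should be much closer to the full 4GB.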

= BIOS upgrade from Linux =

Generally, BIOS upgrades have moved into the BIOS itself these days. This saves a lot of work, and quite possibly lives, since those who earlier rammed their heads through walls can now upgrade directly from the internet instead of using obscure operating systems best avoided. Sometimes, however, one must use these nevertheless. This is a quick run-through of your options.

== DOS ==

Most non-automatic BIOS upgrades are installed from DOS. Doing a BIOS upgrade this way is generally unproblematic. Just set up a USB thingie with [https://www.freedos.org/ FreeDOS], copy your file(s) onto it and boot from it. The command line is the same as in old MS-DOS, except a wee bit more user-friendly, but not a lot.

== Windows ==

Some machines, like laptops from '''HP''', may have BIOS upgrades that are made to be installed from Windows and won't run in FreeDOS or MS-DOS, instead throwing the error '''This program cannot be run in DOS mode'''. This means you'll have to boot a Windows rescue disc and do the job from there. Googling this tells you it's quite easy: you just choose 'make rescue disc' in Windows, and it's all good, except if you don't run Windows, that is. To remedy this problem, do as follows:

=== Download Windows ===

Since you don't run Windows and possibly don't have a spouse or friend around with such unhealthy habits either, you need to get it. It's quite easy - just google 'download windows' and it'll send you to [https://www.microsoft.com/en-us/software-download/windows10ISO somewhere like this].

=== Burn it! ===

Now it's time to burn the installer onto a DVD. You'll need a dual-layer one, since the OS image is larger than the 4.7GB a single-layer DVD holds, but I'm sure you have a ton of those lying around. Now, just place your optical medium in your optical drive and burn, baby, burn!

=== Or make a USB thing? ===

Not a lot of machines have optical drives these days, so you may want to use a USB pen drive or something instead. This is easy: just make the USB drive bootable with the Windows application Microsoft has made for this. Unfortunately, this only runs on Windows, and unlike distributions like Debian, where the '''iso''' file can be dd'ed directly onto a USB drive to make it bootable, the Windows '''iso'''s don't come with this luxury. There are lots of methods out there for making bootable USB things from iso files, but the only thing most of them have in common is that they generally don't work.
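
For images that do support it, like Debian's, writing the ISO to a USB drive is a one-liner with dd. A sketch with a couple of sanity checks, since pointing dd at the wrong device will happily wipe it; write_iso is a made-up name, and the target should be the whole USB device (check with lsblk first). status=progress needs a reasonably recent GNU dd.

<syntaxhighlight lang="bash">
# Write a hybrid ISO (such as Debian's) straight to a USB drive.
# WARNING: everything on the target device is overwritten.
write_iso() {
    local iso=$1 dev=$2
    [ -f "$iso" ] || { echo "No such ISO: $iso" >&2; return 1; }
    [ -b "$dev" ] || { echo "Not a block device: $dev" >&2; return 1; }
    dd if="$iso" of="$dev" bs=4M status=progress conv=fsync
}
</syntaxhighlight>

Run it as root, for example write_iso debian.iso /dev/sdX, with /dev/sdX replaced by your USB drive.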

==== WoeUSB ====

After hours of swearing, it's nice to come across software like [https://github.com/slacka/WoeUSB WoeUSB]. It may not be perfect, and it relies on a bunch of GUI stuff you'll never need, but it '''''works''''', and that's the important part! Just clone the repo, RTFM, and it's all nice. It's slow, but hell, getting a Windows machine and/or an optical drive might be slower.

WoeUSB does nothing fancy, but it '''does''' work. It will create a filesystem, FAT32 or NTFS (better use NTFS, since FAT32 cannot hold files larger than 4GiB). The filesystems it creates are normal ones, so just make sure to copy in the BIOS update file, usually an exe file, to run when Windows is up and running.

==== Install BIOS ====

Boot from the Windows USB thing and choose 'repair computer' and then 'command prompt'. Find the install file, and if you're lucky, you'll find it needs a 32bit OS, just like I did. Since the 64bit version doesn't include 32bit compatibility in the installer, resort to downloading the 32bit version of Windows and start over. Pray to your favourite god(s) not to come across such machines again - it might help.

= 3D printer stuff =

Below are a number of not very ordered paragraphs about 3D printer related stuff, mostly based on my experience. Their content may be interesting or perhaps practical knowledge, but then, that depends on the reader.

== 3D printer related introductions on youtube or elsewhere ==

First off, I'd like to mention some youtube videos that are all (or mostly) a nice start if you're new to 3D printers.

=== [https://www.youtube.com/watch?v=nb-Bzf4nQdE&list=PLDJMid0lOOYnkcFhz6rfQ6Uj8x7meNJJx Thomas Sanladerer's 10-video series on 3D printing basics] ===

Thomas Sanladerer is an experienced 3D printer user/admin/fixer/etc and he has a lot of interesting videos. Some accuse him of being a [https://www.prusa3d.com/ Prusa] fanboy, which he quite obviously is, but that doesn't stop him from making interesting and somewhat neutral videos. The series walks you through the first steps of how things work and so on, and hopefully opens more doors than anything else I've seen.

=== [https://www.youtube.com/watch?v=rp3r921DBGI Teaching Tech's "3D printer tuning reborn"] ===

Teaching Tech is, like Sanladerer or even more so, pedagogically good and explains a lot. They (or he) do a bunch of testing and describe pros and cons of different things, just like other youtubers in the same business. The mentioned video shows how to tune a 3D printer after you have learned the basics, and leans on a webpage made to help you out without too much manual work.

This was a short list, but I guess it'll grow over time.

== Hydrophilic filament ==

Most popular filament types, including PLA, PETG, PP or PA (Polyamide, normally known as Nylon) and virtually any filament type named [https://www.matterhackers.com/news/filament-and-water poly-something] (and thus with an acronym of P-something), are hydrophilic, or more precisely hygroscopic, meaning that as long as they're drier than the atmosphere around them, they'll do their best to suck the humidity out of the air. This is why most filament is shrink-wrapped with a small bag of silica gel in it. If such filament is exposed to normal humid air for some time, it'll become very hard to use. Most of my experience is with PLA, which can handle a bit (depending on make), but still not a lot. With PLA, if you end up with prints where the filament doesn't seem to stick, a higher temperature may help. Otherwise, the filament must be [https://all3dp.com/2/how-to-dry-filament-pla-abs-and-nylon/ baked]. If you don't want to use your oven, try something like a [https://www.jula.no/catalog/hjem-og-husholdning/kjokkenmaskiner/mattilberedningsapparater/frukt-sopptorker/frukt-sopptorker-001641/#tab02 fruit and mushroom dryer]. Then get a good, sealable plastic box, put something like half a kilogram of [https://www.ebay.com/sch/sis.html?_nkw=1KG+Orange+Replacement+Desiccant+Indicating+Silica+Gel+Beads+for+Drawers%2C+Camera&_id=131938742628&&_trksid=p2057872.m2749.l2658 silica gel] in an old sock, tie it and put it in your new dry box.

Please note that ABS absorbs far less moisture, so you won't have to worry too much there. Also, remember that PA/Nylon is extremely hydrophilic. Even a couple of days in normal air humidity can ruin it completely and will require baking.

== Upgrading the firmware on an Ender 3 ==

This is about upgrading the firmware, and thus also flashing a bootloader to, the Creality Ender 3 3D printer. Most of this is well documented in several places, like [https://www.youtube.com/watch?v=fIl5X2ffdyo the video from Teaching Tech] and elsewhere, but I'll just summarise some issues I had.

=== Using an arduino Nano as a programmer ===

Quick note before you read on. If you have an Ender 3 controller version 1.1.5 or newer (or perhaps even a bit older), a CR-10S or some other more or less modern printers or controllers, they come with a bootloader installed already, so don't bother flashing one. The one that's in there already should work well. So if you have one of these, just skip this and jump to the [[Roy's_notes#Flashing_the_printer|next section]].

However, the normal CR-10 (without the S), Ender 3 and a few other printers from Creality come without a bootloader, so you'll need to flash one before upgrading the firmware. Well, strictly speaking, you don't need one; you can save those 8kB or so for other stuff and use a programmer to write the whole firmware, but then you'll need to wire up everything every time you want a new firmware, so in my world, you '''do''' need the bootloader, since it's easier.

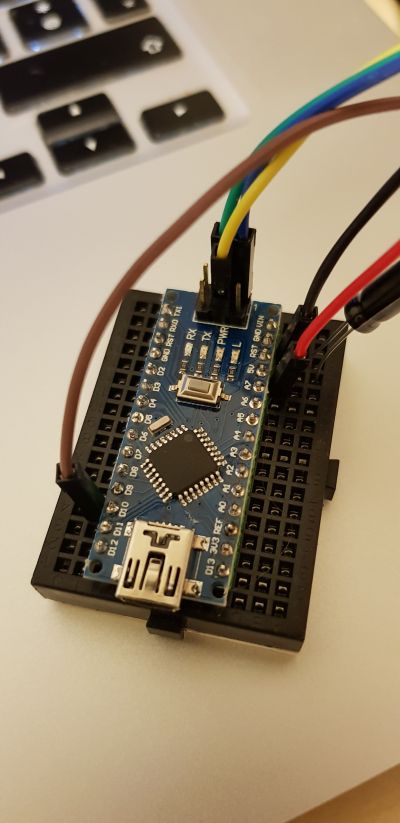

To install a bootloader, you'll need a programmer, and I didn't have one available. Having some el-cheapo Chinese copies of Arduinos around, I read that I could use one of those, and tried it. Although the docs mostly mention the Uno etc., it's just as simple with the Nano copy.

So, grab an Arduino, plug it into your machine with a USB cable, and, using the Arduino IDE, upload the ArduinoISP sketch (found under Examples) so the Arduino can work as a programmer. When this is done, connect a 10µF capacitor between RST and GND to stop it from resetting when used as a programmer. Connect the MOSI, MISO and SCK pins from the ICSP block (that little isolated 6-pin block on top of the ardu) to the same pins on the printer's ICSP header. See [https://www.arduino.cc/en/Tutorial/ArduinoISP here] for a pinout. Then connect Vcc and GND, either from the ICSP block or elsewhere. Some sites say that on some boards you shouldn't use the pins on the ICSP block for this. I doubt it matters, though. Then connect another jumper wire from pin 10 on the ardu to the reset pin of the chip being programmed (that is, on the printer). The ICSP pin layout is the same on the printer and on the ardu, and probably elsewhere. Sometimes [https://xkcd.com/927/ standardisation] actually works…

When it's all wired up, change the setup in the Arduino IDE to use the Sanguino board (which may need to be installed first), with the ATmega1284 16MHz processor, and set the programmer to "Arduino as ISP". Also make sure to choose the right serial port. When all is done, choose Tools | Burn Bootloader, and it should take a few seconds to finish.
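
Before burning, it can be reassuring to check the wiring from the command line. As far as I know, the "Arduino as ISP" programmer in the Arduino IDE drives avrdude with the stk500v1 protocol at 19200 baud, and avrdude run without any -U operation only connects and reads the chip's signature, so nothing is written. The helper below is a sketch; isp_check and its DRY_RUN switch are made up, and /dev/ttyUSB0 is just an example port (the Nano clones often show up there, but check dmesg).

<syntaxhighlight lang="bash">
# Print (with DRY_RUN set) or run an avrdude command that only reads the
# ATmega1284P signature through an ArduinoISP programmer. Nothing is written.
isp_check() {
    local port=${1:-/dev/ttyUSB0}
    local cmd=(avrdude -c stk500v1 -P "$port" -b 19200 -p m1284p)
    if [ -n "${DRY_RUN:-}" ]; then
        echo "${cmd[*]}"
    else
        "${cmd[@]}"
    fi
}
</syntaxhighlight>

If avrdude reports a device signature of all zeroes or all ones, the wiring (or the reset capacitor) is the usual suspect.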

[[File:Ardu-nano-as-programmer.jpg|400px]]

== Flashing the printer ==

When the bootloader is in place, possibly after some weird errors during the process, the screen on the 3D printer will go blank and it'll behave as if bricked. This is OK. Now disconnect the wires and connect the USB cable between computer and printer. If you haven't installed support for the Sanguino board, that is, the variant of the 1284 chip and surrounding electronics that is the core of the printer's controller, you need to install it first. In the Arduino IDE, open Tools / Board / Boards Manager and search for Sanguino. After this, you should find the Sanguino or Sanguino 1284p in the boards menu, and you should be able to communicate with the printer's controller.

Download the [https://www.th3dstudio.com/knowledgebase/th3d-unified-firmware-package/ TH3D unified firmware], making sure it's the latest version. Uncompress the zip and, with Finder/Explorer/whatever-it's-called-on-Linux, browse to '''TH3DUF_R2/Firmware/TH3DUF_R2.ino''' and open it. It should open directly in the Arduino IDE. Keep in mind that the names TH3DUF_R2 and TH3DUF_R2.ino will vary between versions, but you get the point. Now configure it for your particular needs. You'll find most of this in the Configuration.h tab (which is really a file). Most of the other files won't be necessary unless you're doing some more advanced stuff, like replacing the controller with something non-standard or similar, but then again, that's another chapter (or book, even). The video mentioned above describes this well.

After flashing the new firmware, you'll probably get some timeouts and errors, since apparently it resets the printer after giving it the new firmware, but without notifying the flashing software of it doing so. If this happens, calm down, reboot the printer and check its tiny monitor - it should work. If it doesn't work, and if you flashed the bootloader yourself, try to flash it again, and if that doesn't work, try flashing the programmer again yet another time, just for kicks. Again, if you have a newer controller like the v1.1.5, don't touch the bootloader, as the one already installed should work well as it is.

PS: I tried enabling '''MANUAL_MESH_LEVELING''', which in the code says that ''If used with a 1284P board the bootscreen will be disabled to save space''. This effectively disabled the whole printer, possibly because the firmware image became a wee bit too large to fit the 1284P on the Ender. It works well without it, though, and it may be fixed by now, since I tested this back in early 2019.

== And then… ==

With the new firmware in place, the printer should work as before, although with thermal runaway protection to better avoid fires in case the shit hits the fan, and to allow for things like autolevelling. With any luck, [https://www.precisionpiezo.co.uk/ this thing] may be ready for use by the time you read this - it surely looks nice!

= Octoprint/Octopi =

''This could have been under some other section, but again, I just branch out even though most of it is about things mentioned elsewhere.''

When setting up a 3D printer for the first time, you usually get an SD card for storage and transfer data to the printer using [https://en.wikipedia.org/wiki/Sneakernet Sneakernet]. Some printers use micro SD cards, while others use full-size SD cards, which imho is better, since they don't get lost that easily, but otherwise they do the same thing. This works, but is somewhat tedious. If you want to control the printer from a PC or phone, there's a simple trick for that as well - octoprint.

[https://octoprint.org/ Octoprint], written by Gina Häußge, runs on most platforms including Linux, Windows, BSD*, macOS etc. It supports controlling a camera to some extent out of the box, and there's a large number of plugins available to do a lot of things you possibly haven't thought of (and quite often would never need). The easiest way to install it is to just grab a Raspberry Pi - preferably one of the newer models (will get to that later) - download [https://octoprint.org/download/ octopi] and follow the instructions on that page.

== Performance issues ==

Some report (and I have seen it myself) issues with the pi's performance with regards to both handling the octoprint processes (which should be as close to realtime as possible) and handling other stuff like I/O, networking etc. The point is that on a Pi 3 or older, the Ethernet port and the USB ports all share a single internal USB hub. This might be practical, but performance-wise it's '''''not'''''. If you in addition connect a webcam to USB, you're asking for trouble, since USB will be your bottleneck. A Raspberry Pi camera, the kind connected directly to the pi with a ribbon cable, does not use USB, so it's better. Still, for older pi's, there might be some shortage in terms of CPU or I/O. Octopi runs something like Raspbian, which is a fork of Debian, which is a Linux distro, so it all boils down to knowing Linux.

Your typical octopi will run octoprint and a streamer, usually '''mjpg-streamer''', a simple, lightweight video streamer that outputs a stream of JPEG images (about the same format as cinemas use - that is - not MPEG). This may be a bit tough on the CPU and potentially I/O. Add in a dash of USB overload and you may see trouble.

Still, a Pi 3 should be able to handle the load, but it might help to prioritise correctly. The octoprint process is the important part here, so we could give it full priority for both CPU and I/O. Unfortunately, it doesn't seem like the current octopi has been migrated to using systemd for the startup. We'll deal with this later. To fix this in the old SysV init style file present there, the following patch should work against the file /etc/init.d/octoprint:

<syntaxhighlight lang="diff">
--- octoprint.old	2020-07-20 18:46:10.166651965 +0200
+++ octoprint	2020-07-20 18:53:21.724803211 +0200
@@ -22,5 +22,8 @@
 PIDFILE=/var/run/$NAME.pid
 PKGNAME=octoprint
 SCRIPTNAME=/etc/init.d/$PKGNAME
+NICELEVEL=-19            # Near-top CPU priority (-20 is the maximum)
+IONICE_CLASS=real-time   # Realtime I/O class; start-stop-daemon expects the class name
+IONICE_PRIORITY=0        # Highest priority within the class (0-7)
 # Read configuration variable file if it is present
 [ -r /etc/default/$PKGNAME ] && . /etc/default/$PKGNAME
@@ -70,9 +73,10 @@
 is_alive $PIDFILE
 RETVAL="$?"
-
 if [ $RETVAL != 0 ]; then
 start-stop-daemon --start --background --quiet --pidfile $PIDFILE --make-pidfile \
---exec $DAEMON --chuid $OCTOPRINT_USER --user $OCTOPRINT_USER --umask $UMASK -- serve $DAEMON_ARGS
+--exec $DAEMON --chuid $OCTOPRINT_USER --user $OCTOPRINT_USER --umask $UMASK \
+--nicelevel $NICELEVEL --iosched $IONICE_CLASS:$IONICE_PRIORITY \
+-- serve $DAEMON_ARGS
 RETVAL="$?"
 fi
 }
</syntaxhighlight>

This simply raises the process's priority so it's allowed to use most of the resources on the pi, regardless of what other processes are whining about.

PS: Code not tested - I don't have a pi right here. Will test later, but I'm pretty sure it'll work. After all, it's just a few new arguments to start-stop-daemon.
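
Until the init script is patched, the same priorities can be applied to an already running octoprint process with renice and ionice. A sketch: the default pidfile path is an assumption based on PIDFILE=/var/run/$NAME.pid in the init script (check what $NAME is on your system), the function name and DRY_RUN switch are made up, and it needs root. ionice class 1 is the realtime class.

<syntaxhighlight lang="bash">
# Raise the CPU and I/O priority of a running octoprint (run as root).
# The default pidfile is an assumption based on PIDFILE=/var/run/$NAME.pid.
# With DRY_RUN set, the commands are only printed.
boost_octoprint() {
    local pidfile=${1:-/var/run/octoprint.pid}
    local pid run=""
    pid=$(cat "$pidfile" 2>/dev/null) || { echo "No pidfile: $pidfile" >&2; return 1; }
    [ -n "${DRY_RUN:-}" ] && run=echo
    $run renice -n -19 -p "$pid"      # same as --nicelevel -19
    $run ionice -c 1 -n 0 -p "$pid"   # class 1 = realtime, priority 0
}
</syntaxhighlight>

Afterwards, ps -o pid,ni,cmd -p <pid> and ionice -p <pid> should show the new values. Note that this doesn't survive a restart of the service; the init script patch does.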

roy | |||

Latest revision as of 18:53, 17 November 2020

Page move

After borrowing space on Malin's wiki for some years, further edits will be done on my new wiki so that next time the wiki goes down after something strange happened, she won't get a ton of messages from me, but I'll have to fix it myself - oh well ;)

LVM, md and friends

Linux' Logical Volume Manager (LVM)

LVM in general

LVM is designed to be an abstraction layer on top of physical drives or RAID, typically mdraid or fakeraid. Keep in mind that fakeraid should be avoided unless you really need it, like in conjunction with dual-booting Linux and Windows on fakeraid. LVM broadly consists of three elements: the "physical" devices (PV), the volume group (VG) and the logical volume (LV). There can be multiple PVs, VGs and LVs, depending on requirements. More about this below. All commands are given as examples, and all of them can be fine-tuned using extra flags if needed. In my experience, the defaults work well for most use cases.

I'm mentioning filesystems below too. Where I write ext4, the same applies to ext2 and ext3.

Create a PV

A PV is the "physical" part. This does not need to be a physical disk, but can also be another RAID, be it an mdraid, fakeraid, hardware RAID, a virtual disk on a SAN or otherwise, or a partition on one of those.

# These add three PVs, one on a drive (or hardware raid) and another two on mdraids.

pvcreate /dev/sdb

pvcreate /dev/md1 /dev/md2

For more information about pvcreate, see the manual.

Create a VG

The volume group consists of one or more PVs grouped together, on which LVs can be placed. If several PVs are grouped in a VG, it's generally a good idea to make sure these PVs have some sort of redundancy, as in mdraid/fakeraid or hwraid. Otherwise it will be like using a RAID-0, with each of the independent drives being a single point of failure. LVM has RAID code in it as well, so you can use that. I haven't done so myself, as I generally stick to mdraid. The reason is that mdraid is, in my opinion, older and more stable and has more users (meaning bugs are reported and fixed faster whenever they are found). That said, I believe LVM RAID uses the same kernel RAID code as mdraid, so there may not be much of a difference. I still stick with mdraid. To create a VG, run

# Create volume group my_vg

vgcreate my_vg /dev/md1

Note that if vgcreate is given a device (such as /dev/md1 above) that has not been initialised as a PV, this is done implicitly, so if you don't need any special flags to pvcreate, you can simply skip it and let vgcreate do that for you.

Create an LV

LVs can be compared to partitions, somehow, since they are boundaries around part or all of a VG. The difference between them and a partition, however, is that they can be grown or shrunk easily and also moved around between PVs without downtime. This flexibility makes them superior to partitions, as your system can be changed without users noticing it. By default, an LV is allocated "thickly", meaning all the space given to it is allocated from the VG and thus the PV. The following makes a 100GB LV named "thicklv". When making an LV, I usually allocate what's needed plus some more, but not everything, just to make sure there's space available for growth on any of the LVs on the VG, or for new LVs.

# Create a thick provisioned LV named thicklv on the VG my_vg

lvcreate -n thicklv -L 100G my_vg

After the LV is created, a filesystem can be placed on it unless it is meant to be used directly. Applications for direct use include swap space, VM storage and certain database systems. Most of these will, however, work on filesystems too, although my testing has shown that for swap space, there is a significant performance gain from using dedicated storage without a filesystem. As for filesystems, most Linux users use either ext4 or xfs. Personally, I generally use XFS these days. See my notes below on filesystem choice.

# Create a filesystem on the LV - this could have been mkfs -t ext4 or whatever filesystem you choose

mkfs -t xfs /dev/my_vg/thicklv

Then just edit /etc/fstab with correct data and run mount -a, and you should be all set.

Create LVM cache

LVMcache is LVM's solution to caching a slowish filesystem on spinning rust with the help of much faster SSDs. For this to work, use a separate SSD or a mirror of two (just in case) and add the new disk/md dev to the same VG. In this case, we use a small mirror, md1. LVM is not able to cache to a disk or partition outside the volume group.

vgextend data /dev/md1

Physical volume "/dev/md1" successfully created.

Volume group "data" successfully extended

Then create two LVs, one for the data (cached file contents) and one for the cache metadata (LVM's bookkeeping of which blocks are cached). Typically, the metadata part won't need to be very large. 100M should usually do unless the cache is very large. I guess there are ways to calculate it, but again, 1GB should do for everyone (or was that 640k?). As for the data cache, I chose to use 40G for a 15TB RAID (the commands below allocate 100G; adjust to taste). It will only cache what's actively used anyway, so there's no need for overkill.

lvcreate -L 1G -n _cache_meta data /dev/md1

lvcreate -L 100G -n _cache data /dev/md1

# Some would want --cachemode writeback, but if you're paranoid, I wouldn't recommend it. Metadata or data can easily be corrupted in case of failure. Most filesystems will, however, recover from this these days since they use journaling. It really speeds things up, though.

lvconvert --type cache-pool --cachemode writethrough --poolmetadata data/_cache_meta data/_cache

lvconvert --type cache --cachepool data/_cache data/data

That should be it. If you benchmarked the disk before this, you should try again and you'll probably get a nice surprise. If not, well, see below how to turn this off again :)
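
To see whether the cache actually helps, lvs can report the cache counters (fields such as cache_read_hits and cache_read_misses; see lvs -o help for the list your version supports). A small sketch that turns them into a hit ratio; hit_ratio is a made-up helper, and data/data is the LV from the example above.

<syntaxhighlight lang="bash">
# Read-hit ratio in percent (integer) from LVM cache hit/miss counters
hit_ratio() {
    local hits=$1 misses=$2
    local total=$(( hits + misses ))
    if (( total == 0 )); then
        echo "n/a"
    else
        echo $(( 100 * hits / total ))
    fi
}

# On the real system, with the cached LV data/data from the example above:
# read -r hits misses < <(lvs --noheadings -o cache_read_hits,cache_read_misses data/data)
# hit_ratio "$hits" "$misses"
</syntaxhighlight>

A ratio that stays low after the cache has had time to warm up suggests the workload doesn't benefit much from caching.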

Disabling LVM cache

If you want to change the cached LV, such as growing or shrinking it, you'll have to disable caching first and then re-enable it. There's no quick-and-easy fix, but you just do as you did when enabling it in the first place.

First, disable caching for the LV. This will do it cleanly and remove the LVs created earlier for caching the main device.

lvconvert --uncache data/data

Then, since you now have a VG with an empty PV in it, earlier used for caching, I'd recommend removing it for now to avoid expanding onto that as well. Since they're in the same VG, this might happen, and if you get as far as extending the LV and the filesystem on it, and this filesystem is XFS or a similar filesystem that cannot be shrunk, you have a wee problem.

Just remove that PV from the VG

vgreduce data /dev/md1

The PV is still there, but pvs should now show it as not belonging to any VG.
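To check that from a script rather than by eye, you can filter the output of pvs. Here is a minimal sketch. It assumes an orphan PV shows up with an empty VG column in the output of pvs --noheadings -o pv_name,vg_name. The name orphan_pvs is made up.

```shell
#!/bin/sh
# Print PVs that don't belong to any VG. Reads the output of
#   pvs --noheadings -o pv_name,vg_name
# on stdin; a PV without a VG has an empty second column, so only one field.
orphan_pvs() {
    awk 'NF == 1 { print $1 }'
}

# Example with canned pvs output; on a real system (as root):
#   pvs --noheadings -o pv_name,vg_name | orphan_pvs
printf '  /dev/md0   data\n  /dev/md1\n' | orphan_pvs
```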

Growing devices

LVM objects can be grown and shrunk. If a PV resides on a RAID where a new drive has been added or otherwise grown, or on a partition or virtual disk that has been extended, the PV must be updated to reflect these changes. The following command will grow the PV to the maximum available on the underlying storage.

# Resize the PV on /dev/md1 (not the RAID itself, only the PV on it) to its full size

pvresize /dev/md1

If a new PV is added, the VG can be grown to add the space on that in addition to what's there already.

# Extend my_vg to include md2 and its space

vgextend my_vg /dev/md2

With more space available in the VG, the LV can now be extended. Let's add another 50GB to it.

# Extend thicklv by 50GB. If you know you won't need the space anywhere else, you may want to

# use lvresize -l +100%FREE instead. Keep in mind the difference between -l (extents or percentages) and -L (size, e.g. 50G)

lvresize -L +50G my_vg/thicklv
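Since -l counts extents and -L takes a size, it can help to convert between them. Here is a minimal sketch. It assumes the VG uses vgcreate's default 4 MiB extent size unless you pass another one. The name gib_to_extents is made up.

```shell
#!/bin/sh
# Convert a size in GiB to a number of LVM extents, to compare lvresize -l and -L.
# 4 MiB is vgcreate's default extent size; check yours with
#   vgs -o vg_name,vg_extent_size my_vg
gib_to_extents() { # $1 = size in GiB, $2 = extent size in MiB (default 4)
    pe_mib=${2:-4}
    echo $(( $1 * 1024 / pe_mib ))
}

# With 4 MiB extents, "lvresize -L +50G" is the same as "lvresize -l +12800"
gib_to_extents 50
```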

Growing filesystems

After the LV has grown, run xfs_growfs (XFS) or resize2fs (ext4) to make use of the new space. To grow an ext4 filesystem to all available space, run the command below. To grow it only partly, to shrink it or to do anything else, please see the manuals.

The filesystem can be mounted when this is run. If you for some reason get an access denied error when running this as root or with sudo, it's likely caused by a filesystem error. To fix this, unmount the filesystem first and run fsck -f /dev/my_vg/thicklv and remount it before retrying to extend it.

resize2fs /dev/my_vg/thicklv

It's similar with XFS, but note that xfs_growfs takes the filesystem's mount point, not the device name. Also note that an XFS filesystem must be mounted to be grown. In the old days, by contrast, filesystems had to be unmounted to be grown.

xfs_growfs /my_mountpoint
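ext4 wants the device and XFS wants the mount point, so a small helper can pick the right command. Here is a minimal sketch that only prints the command and runs nothing. The name grow_fs_cmd is made up. The findmnt call in the comment is one way to get the filesystem type of a mount point.

```shell
#!/bin/sh
# Print (not run) the command that grows a filesystem to fill its LV.
# resize2fs takes the device, xfs_growfs takes the mount point.
grow_fs_cmd() { # $1 = fstype, $2 = device, $3 = mount point
    case "$1" in
        ext3|ext4) echo "resize2fs $2" ;;
        xfs)       echo "xfs_growfs $3" ;;
        *)         echo "don't know how to grow $1" >&2; return 1 ;;
    esac
}

# On a live system the type can come from findmnt:
#   grow_fs_cmd "$(findmnt -no FSTYPE /my_mountpoint)" /dev/my_vg/thicklv /my_mountpoint
grow_fs_cmd xfs /dev/my_vg/thicklv /my_mountpoint
```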

Rename system VG

Some distros create VG names like those-from-a-sysadmin-with-a-well-developed-paranoia-not-to-hit-a-duplicate. Debian, for instance, uses names like "my-full-hostname-vg". Personally, I don't like these, since if I were to move a disk or vdisk somewhere else, I'd make sure not to add a disk with a VG named 'sys' to a system that already has one. If you do, most distros will refuse to use the VGs with duplicate names, and the OS won't boot until either one is specified by its UUID or one of them is removed. Since I'm aware of this, I stick to the super-risky practice of naming all system VGs 'sys' and so on.

If you do this, keep in mind that it may blow up your computer and take your girl- or boyfriend with it or even make either of them pregnant, allowing Trump to rule your life and generally make things suck. You're on your own!

# Rename the VG

vgrename my-full-hostname-vg sys
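The old paths you'll be replacing, like '/dev/mapper/debian--stretch--machine--vg-root', have doubled hyphens. That is because device-mapper escapes any '-' inside a VG or LV name as '--' and joins the VG and LV names with a single '-'. Here is a minimal sketch of that mapping, which helps when working out which strings to search for. The name dm_name is a made-up helper.

```shell
#!/bin/sh
# Build the /dev/mapper path device-mapper uses for an LV: any '-' inside the
# VG or LV name is doubled, and the two names are joined with a single '-'.
dm_name() { # $1 = VG name, $2 = LV name
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '/dev/mapper/%s-%s\n' "$vg" "$lv"
}

dm_name debian-stretch-box-vg root
```

After the rename, /dev/mapper/sys-root and /dev/sys/root refer to the same LV.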

Now, in /boot/grub/grub.cfg, change the old LV path from something like '/dev/mapper/debian--stretch--machine--vg-root' to your new and fancy '/dev/sys/root'. Likewise, in /etc/fstab, replace occurrences of '/dev/mapper/debian--stretch--machine--vg-' (or similar) with '/dev/sys/'. Here's what it looked like on my box, with the old entries commented out:

#/dev/mapper/debian--stretch--box--vg-root / ext4 errors=remount-ro,discard 0 1

/dev/sys/root / ext4 errors=remount-ro,discard 0 1

# /boot was on /dev/vda1 during installation

UUID=myverylonguuidwithatonofgoodinfoinit /boot ext2 defaults 0 2

#/dev/mapper/debian--stretch--box--vg-swap_1 none swap sw 0 0

/dev/sys/swap_1 none swap sw 0 0

Update /etc/initramfs-tools/conf.d/resume with similar data for swap.
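If you'd rather not edit by hand, sed can do the replacement in /etc/fstab and in the resume file. Here is a minimal sketch, assuming GNU sed and the example prefix from this page. The name rewrite_vg_paths is made up. Unlike the manual edit above, it replaces the paths in place instead of keeping the old lines commented out. It leaves existing comments alone and keeps a .bak copy of the file.

```shell
#!/bin/sh
# Replace the old mapper prefix with the new VG path in a config file, skipping
# commented lines and keeping a backup copy as FILE.bak.
rewrite_vg_paths() { # $1 = file, $2 = old prefix, $3 = new prefix
    sed -i.bak -e '/^[[:space:]]*#/!s|'"$2"'|'"$3"'|g' "$1"
}

# Example (as root, after checking that the prefixes match your system):
#   rewrite_vg_paths /etc/fstab /dev/mapper/debian--stretch--box--vg- /dev/sys/
#   rewrite_vg_paths /etc/initramfs-tools/conf.d/resume /dev/mapper/debian--stretch--box--vg- /dev/sys/
```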

You might want to run 'update-initramfs -u -k all' after this, but I'm not sure if it's needed.

Now, pray to the nearest god(s) and give it a reboot and it should (probably) work.

After reboot, run swapoff -a, then mkswap /dev/sys/swap_1 (or whatever it's called on your system) and swapon -a again. You may want to give it another reboot or two for kicks, but it should work well even without that.

Migration tools

At times, storage regimes change, new storage is added, and sometimes it's not easy to migrate with the current hardware or its support systems. Once I had to migrate a 45TiB fileserver from one storage system to another, preferably without downtime. The server originally had three 15TiB PVs, of which two were full and the third half full. I resorted to using pvmove to simply move the data on the PVs in use to a new PV. We started out by creating a new 50TiB PV, sde, and then ran pvmove.

# Attach /dev/sde to the VG my_vg

vgextend my_vg /dev/sde

# Move the contents of /dev/sdb over to /dev/sde block by block. If no target is given to pvmove,

# the contents of the source (sdb here) will be moved to free space elsewhere in the VG.

pvmove /dev/sdb /dev/sde

This took a while (as in a week or so) - the pvmove command uses old code and logic (or at least did that when I migrated this in November 2016), but it affected performance on the server very little, so users didn't notice. After the first PV was migrated, I continued with the other two, one after the other, and after a month or so, it was migrated. We used this on a number of large fileservers, and it worked flawlessly. Before doing the production servers I also tried interrupting pvmove in various ways, including hard resets, and it just kept on after the reboot.

NOTE: Even though it worked well for us, always keep a good backup before doing this. Things may go wrong, and without a good backup, you may be looking for a hard-to-find new job the next morning.
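The one-PV-at-a-time approach can be scripted. Here is a minimal sketch that by default only prints the commands. The name migrate_pvs is made up, and /dev/sdc and /dev/sdd are hypothetical names for the other two old PVs. The vgreduce step removes each emptied PV from the VG. That step is an assumption about what you'd want next and is not part of the migration described above.

```shell
#!/bin/sh
# Move the listed source PVs onto one target PV, one after the other, and drop
# each source from the VG once it's empty. By default the commands are only
# printed; run with RUN= (set but empty) as root to actually execute them.
run=${RUN-echo}

migrate_pvs() { # $1 = VG, $2 = target PV, remaining args = source PVs
    vg=$1; target=$2; shift 2
    for src in "$@"; do
        $run pvmove "$src" "$target" || return 1
        $run vgreduce "$vg" "$src" || return 1
    done
}

# /dev/sdc and /dev/sdd are hypothetical names for the other two old PVs
migrate_pvs my_vg /dev/sde /dev/sdb /dev/sdc /dev/sdd
```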

mdadm workarounds

Migrate from a mirror (raid-1) to raid-10

Create a mirror

mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdb /dev/sdc

Put LVM (PV/VG/LV) on md0 (see above), put a filesystem on the LV and fill it with some data.

Now, some months later, you want to change this to raid10 to keep the same amount of redundancy, but allow more disks into the system.

mdadm --grow /dev/md0 --level=10

mdadm: Impossibly level change request for RAID1

So - no - that doesn't work.

Since we're on LVM already, this'll be easy. If you're using filesystems directly on partitions, you can do this the same way, but without the pvmove part, using rsync or whatever you like instead. I'd recommend using LVM for the new RAID, which should be rather obvious from this article. Now plug in a new drive and create a new raid10 on it. If you have two new drives, install both.

# change "missing" to the other device name if you installed two new drives

mdadm --create /dev/md1 --level=10 --raid-devices=2 /dev/vdd missing

Now, as described above, just vgextend the VG, adding the new RAID, and run pvmove to move the data from the old PV (residing on the old raid1) to the new PV (on raid10). After pvmove is finished (which may take a while, see above), just run

vgreduce raidtest /dev/md0

pvremove /dev/md0

mdadm --stop /dev/md0

…and your disk is free to be added to the new array. If the new RAID is running in degraded mode (because you created it with just one drive), don't wait too long, since you don't have redundancy. Just mdadm --add the devices.
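Running degraded is easy to forget. Here is a minimal sketch that picks degraded arrays out of /proc/mdstat by comparing the two numbers in the [configured/active] counter. The name degraded_arrays is made up. mdadm --detail gives the authoritative answer for a single array.

```shell
#!/bin/sh
# Print the md arrays in /proc/mdstat-style input that are degraded, i.e. where
# the [configured/active] counter shows fewer active devices than configured.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        match($0, /\[[0-9]+\/[0-9]+\]/) {
            split(substr($0, RSTART + 1, RLENGTH - 2), n, "/")
            if (n[2] + 0 < n[1] + 0) print name
        }
    '
}

# On a live system:
#   degraded_arrays < /proc/mdstat
```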

If /proc/mdstat now tells you your raid10 is active and has three drives, one of which is a spare, it should look something like this:

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md1 : active raid10 vdc[3](S) vdb[2] vdd[0]

8380416 blocks super 1.2 2 near-copies [2/2] [UU]

Then just run mdadm --grow --raid-devices=3 /dev/md1 to turn the spare into an active member.

The RAID section is not complete. I'll write more on migrating from raid10 to raid6 later. It's not straightforward, but easier than this one :)

Thin provisioned LVs

Thin provisioning on LVM is a method for not allocating all the space given to an LV up front. For instance, if you have a 1TB VG and want to give an LV 500GB, although it currently only uses a fraction of that, a thin LV can be a good alternative. This lets you set a limit on how much it can use, while letting it grow dynamically without manual work by the sysadmin. Thin provisioning adds another layer of abstraction by creating a special LV as a thin pool, from which space is allocated to the thin volume(s).

Create a thin pool

Allocate 1TB to the thin pool to be used for thinly provisioned LVs. Keep in mind that the space allocated to the thin pool is in fact thick provisioned. Only the volumes put on the thin pool are thin provisioned. This will create two hidden LVs, one for data and one for metadata. Normally the defaults will do, but check the manual if the data doesn't match the usual patterns (such as billions of files, resulting in huge amounts of metadata). I believe the metadata part should be resizable at a later time if needed, but I have not tested it.

# Create a pool for thin LVs. Keep in mind that the pool itself is not thin provisioned, only the volumes residing on it

lvcreate --size 1T --type thin-pool --thinpool thinpool my_vg

Create a thin volume

You have the thinpool, now put a thin volume on it. It will allocate some space for metadata, but probably not much (a few megs, perhaps).

# Now, create a volume named thinvol with a virtual (-V) size of half a terabyte, using the thin pool (-T) named thinpool for storage

lvcreate -V 500G -T my_vg/thinpool --name thinvol

The new volume's device name will be /dev/my_vg/thinvol. Now, create a filesystem on it, add it to fstab and mount it. The df command will report available space according to the virtual size (-V), while lvs will show how much data is actually used on each of the thinly provisioned volumes.
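Thin volumes can promise more space than the pool has, so it's worth knowing how overcommitted a pool is. Here is a minimal sketch of the arithmetic, with sizes in GiB. The name overcommit_pct is made up.

```shell
#!/bin/sh
# Overcommit of a thin pool in percent: sum of the thin volumes' virtual sizes
# divided by the pool size. Above 100 means the pool can fill up before the
# volumes do. All sizes in GiB; integer arithmetic, rounded down.
overcommit_pct() { # $1 = pool size, remaining args = virtual sizes of thin LVs
    pool=$1; shift
    total=0
    for v in "$@"; do
        total=$(( total + v ))
    done
    echo $(( total * 100 / pool ))
}

# One 500G thin volume in a 1T pool
overcommit_pct 1024 500
```

For the real fill level, lvs -o lv_name,data_percent,metadata_percent my_vg shows how full the pool and its volumes are.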

Filesystem dilemmas

XFS or ext4 or something else

The choice of filesystem depends on your use. Distros such as RHEL/CentOS have moved to XFS by default from v7. Debian/Ubuntu still stick to ext4, like most others. I'll discuss my thoughts on ext4 vs XFS below and leave the other filesystems for later.

ext4

ext4 is probably the most used filesystem on Linux as of writing. It's rock stable and has gained a lot of features since the original ext2. It still retains backward compatibility: the ext4 driver can mount ext2 and ext3, and the same fsck handles all three. However, it doesn't handle large filesystems very well. If a filesystem is created smaller than 16TiB, it cannot be grown to anything larger than 16TiB, at least not without the 64bit feature. This may have changed lately, though. Another issue is error handling. If a large filesystem (say 10TiB) is damaged, an fsck may take several hours, leading to long downtime. When I refer to ext4 further in this text, the same applies to ext2 and ext3 unless otherwise specified.
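The 16TiB number comes from block addressing. Without the 64bit feature, ext4 uses 32-bit block numbers, so with 4 KiB blocks the maximum is 2^32 × 4 KiB = 16 TiB. Here is a minimal sketch of that arithmetic. The name ext4_32bit_max_tib is made up.

```shell
#!/bin/sh
# Largest ext4 filesystem (TiB) addressable with 32-bit block numbers, i.e.
# without the 64bit feature, for a given block size in bytes (default 4096).
ext4_32bit_max_tib() {
    block=${1:-4096}
    echo $(( (1 << 32) * block / (1 << 40) ))
}

# 4 KiB blocks
ext4_32bit_max_tib
```

tune2fs -l /dev/my_vg/thicklv lists the filesystem features, so you can check whether 64bit is among them.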

XFS

XFS was originally developed by SGI some time in the bronze age, but has been worked on heavily since then. RHEL and CentOS version 7 and later use XFS as the default filesystem. It is quick and it scales well, but it lacks at least two things ext4 has. It cannot be shrunk (not that you normally need that, but it's nice if you made a typo or need to change things). Also, it doesn't do an automatic fsck on bootup. If something is messed up on the filesystem, you'll have to log in as root on the console (not ssh), which might be an issue on some systems. The fsck equivalent, xfs_repair, is a ''lot'' faster than ext4's fsck, though.

ZFS

ZFS is like a combination of RAID, LVM and a filesystem, supporting full checksumming, auto-healing (given sufficient redundancy), compression, encryption, replication, deduplication (if you're brave and have a ton of memory) and a lot more. It deserves a separate chapter and needs a lot more than a paragraph here.

btrfs

btrfs, pronounced "butterfs" or "betterfs" or just spelled out, is a filesystem that mimics ZFS. It has been around for 10 years or so, but hasn't yet proven to be really stable. Having followed its progress over those years, I've found it to be a nice toy to play with, but not something to use in production. Again, others may say otherwise, but these are just my words.

Low level disk handling

This section is for low-level handling of disks, regardless of storage system elsewhere. These apply to mdraid, lvmraid and zfs and possibly other solutions, with the exception of individual drives on hwraid (and maybe fakeraid), since there, the drives are hidden from Linux (or whatever OS).

S.M.A.R.T. and slow drives

Modern drives (that is, from about 1990 or later) have S.M.A.R.T. (or just smart, since it's easier to type) monitoring built in, meaning the disk's controller monitors itself. This is handy and will ideally warn you in advance that a drive is flaky or dying. Modern (2010+) smart monitoring is more or less the same. It can be trusted about 50% of the time. Usually, if smart detects an error, it's a real error. I don't think I've seen it report a false positive, but others may know better.

smartmontools